Load Sharing Containers

Load sharing containers allow you to identify blocks or paths that are in a load sharing redundancy configuration. Each path in the container consists of one or more blocks (connected in series if the path contains more than one block). Two or more paths in the container share the responsibility for keeping the system running properly; if one of them fails, the others take on an increased "load" so that the system can continue to operate. As a result, the failure characteristics of the surviving components change each time one of them fails.

For example, suppose that two heat dissipation devices operate in a load sharing configuration and together they are expected to dissipate enough heat to maintain a temperature difference of 100 K. When both units are operating, they share the load and each dissipates 50% of the heat. If one unit fails, the load on the other unit increases to 100% of the heat.
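The arithmetic of this example can be sketched as a small load redistribution function. It assumes, hypothetically, that the surviving units split the total load in proportion to a per-unit weight, so equal weights reproduce the 50%/100% split described above. The function name `share_load` and its `weights` parameter are illustrative only and may not match exactly how the software applies its weight proportionality factor.

```python
def share_load(total_load, weights, failed):
    """Split total_load across surviving units in proportion to their weights.

    weights: hypothetical per-unit weights (equal weights mean an equal split)
    failed: set of indices of failed units, which carry no load
    """
    surviving = [i for i in range(len(weights)) if i not in failed]
    if not surviving:
        raise ValueError("no surviving units are left to carry the load")
    total_weight = sum(weights[i] for i in surviving)
    return [
        total_load * weights[i] / total_weight if i in surviving else 0.0
        for i in range(len(weights))
    ]


# Two identical heat dissipation units sharing 100% of the heat load.
print(share_load(100.0, [1.0, 1.0], failed=set()))  # [50.0, 50.0]
print(share_load(100.0, [1.0, 1.0], failed={0}))    # [0.0, 100.0]
```

Unequal weights would let a larger unit carry a proportionally larger share, both before and after a failure.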

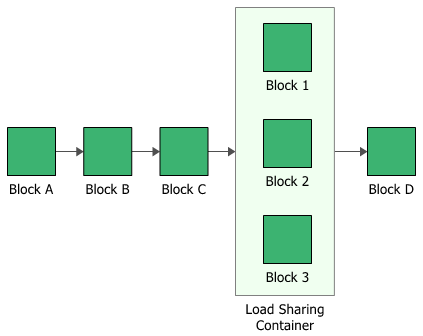

In the figure above, Blocks 1, 2 and 3 are in a load sharing container, and each has its own failure characteristics. If the container's properties specify that only one contained block must succeed for the container to succeed, then all three contained blocks must fail for the container to fail. However, as individual contained blocks fail, the failure characteristics of the remaining blocks change because they must carry a higher load to compensate for the failed ones.
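The effect of the increasing load on the remaining blocks can be explored with a small Monte Carlo sketch. This is not how the software models load sharing: it assumes, hypothetically, that every block has an exponential life whose failure rate scales with its share of the load as `base_rate * (share / nominal_share) ** exponent`, and that the surviving blocks split the load equally. Because exponential lives are memoryless, the sketch can redraw the time to the next failure with the new rates after each failure; non-exponential distributions would require tracking the damage each block has already accumulated.

```python
import random


def simulate_container_life(base_rates, total_load, required, exponent=1.0, rng=random):
    """Return one simulated failure time of a hypothetical load sharing container.

    Assumptions (illustrative only): each working block has an exponential life
    whose rate is base_rate * (share / nominal_share) ** exponent, where the
    nominal share is the load each block carries when all blocks work, and the
    surviving blocks split total_load equally.
    """
    n = len(base_rates)
    if not 1 <= required <= n:
        raise ValueError("required must be between 1 and the number of blocks")
    nominal_share = total_load / n
    working = list(range(n))
    t = 0.0
    # The container is up while at least `required` blocks are working.
    while len(working) >= required:
        share = total_load / len(working)  # survivors pick up the failed blocks' load
        rates = [base_rates[i] * (share / nominal_share) ** exponent for i in working]
        # Memorylessness: the time to the next failure is exponential with the summed rate.
        t += rng.expovariate(sum(rates))
        # The block that fails is chosen with probability proportional to its rate.
        failed = rng.choices(working, weights=rates, k=1)[0]
        working.remove(failed)
    return t


rng = random.Random(42)
lives = [simulate_container_life([1.0, 1.0, 1.0], 100.0, required=1, rng=rng)
         for _ in range(20000)]
print(sum(lives) / len(lives))  # close to 1.0 for exponent=1.0
```

With three identical blocks, a base rate of 1.0 and `exponent=1.0`, each failure raises the survivors' rates so that the total failure rate stays at 3.0, and the mean container life is 1.0. With `exponent=0.0` (load has no effect) the blocks behave like ordinary active redundancy and the mean rises to 1/3 + 1/2 + 1 = 11/6 ≈ 1.83, which shows how the extra load erodes the benefit of the redundancy.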

The container has its own properties that describe how the container behaves. Each block inside the container (a contained block) also has its own block properties.

To configure a load sharing container, in addition to the common block properties, you must specify the Number of paths required, which is the number of active paths within the container that must succeed for the container to succeed. If fewer than the required number of paths succeed, the container is considered to be failed. The contained blocks must be within the load sharing container, but they do not need to be connected to each other.
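The success rule for the container can be sketched in a few lines. This is a minimal illustration, assuming each path is given as a list of block states (True means the block is working) and that blocks within a path are in series; `container_succeeds` and `paths_required` are hypothetical names standing in for the Number of paths required property. The sketch only evaluates the container's state and does not model how the load changes.

```python
def path_succeeds(block_states):
    """A path is a series of blocks, so it succeeds only if every block in it succeeds."""
    return all(block_states)


def container_succeeds(paths, paths_required):
    """Return True if at least `paths_required` paths succeed.

    paths: list of paths, each a list of booleans (True = block working).
    paths_required: hypothetical stand-in for the Number of paths required property.
    """
    working_paths = sum(1 for path in paths if path_succeeds(path))
    return working_paths >= paths_required


# Blocks 1, 2 and 3 each form their own path, and one path is required.
print(container_succeeds([[False], [False], [True]], paths_required=1))   # True
print(container_succeeds([[False], [False], [False]], paths_required=1))  # False
# A two-block path fails as soon as either of its blocks fails.
print(container_succeeds([[True, False], [True]], paths_required=2))      # False
```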

Additional options are available for the load sharing container, including:

- Set block as failed, if selected, indicates that the container is failed (i.e., the block is "off" or absent from the system). An X is displayed on the container to indicate that it is failed. The block is considered failed throughout the entire analysis/simulation, and no maintenance actions are performed (i.e., any failure and maintenance properties are ignored). This option can be used for "what-if" analyses to investigate the impact of a block on system metrics such as reliability, availability and throughput. You can also set this option by selecting the block in the diagram and choosing Diagram > Settings > Set Block as Failed.


When this option is selected, no other properties are available for the block. Any properties you have already specified are not lost; they are simply hidden because they are not relevant, and they will reappear if you clear the Set block as failed option.

- Adjust Numerical Convergence Settings opens the Algorithm Setup window, which allows you to modify the parameters of the numerical integration that is used in analyzing the container, as well as to specify a level of accuracy that should be reached during calculations. This option is available only for analytical diagrams.
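To see why the analysis of a load sharing container involves an integral, consider a minimal worked case under assumptions that are not part of the original text: two identical contained blocks, one path required, and exponential lives with failure rate λ₁ per block while both share the load and rate λ₂ for the survivor carrying the full load (λ₁ and λ₂ are illustrative symbols). The container survives to time t if neither block fails, or if one block fails at some time x ≤ t (combined rate 2λ₁) and the survivor then lasts the remaining t − x at the higher rate:

```latex
R(t) = e^{-2\lambda_1 t} + \int_0^t 2\lambda_1 e^{-2\lambda_1 x}\, e^{-\lambda_2 (t - x)}\, dx
     = e^{-2\lambda_1 t} + \frac{2\lambda_1}{2\lambda_1 - \lambda_2}\left(e^{-\lambda_2 t} - e^{-2\lambda_1 t}\right),
\qquad \lambda_2 \neq 2\lambda_1
```

For λ₂ = 2λ₁ the integral instead evaluates to 2λ₁t·e^(−2λ₁t). This closed form depends on the memorylessness of the exponential distribution; with other distributions, the survivor's age at the moment of the first failure enters the integrand, and integrals of this kind generally have to be evaluated numerically, which is presumably the type of computation the numerical convergence settings govern.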

For load sharing containers in simulation diagrams, you can also specify throughput properties, which define the amount of output that can be processed by the block in a given period of time.

What's Changed? Version 7 required a life-stress relationship for load sharing configurations and based the recalculation of load after a block failure on that relationship; subsequent versions calculate load using the weight proportionality factor as a multiplier. Because of this, if you convert a Version 7 diagram that uses load sharing containers, you will need to manually configure the contained load sharing blocks after conversion.

The ReliaWiki resource portal has more information on load sharing configurations at: http://www.reliawiki.org/index.php/Time-Dependent_System_Reliability_(Analytical).